In our previous article, "Harnessing Generative AI for Software Development: Our Experiments with User Story Generation", we explored how Propel Ventures uses Generative AI to generate user stories. Today, we delve into the next phase of our AI journey: automating UI code generation, with the goal of translating high-fidelity designs into functional code.

Experiment One: Off-the-Shelf Tools

Our first foray into AI-assisted code generation involved off-the-shelf tools such as CodeJet, Locofy, and Builder.io. These platforms promise to convert Figma designs into usable code, ideally streamlining the development process. We embarked on this experiment hoping to reduce the manual coding required to bring our UI designs to life.

The reality, however, was a mixed bag. While these tools could indeed translate a design into code, the "one and done" output frequently missed the mark. The generated code often needed significant reworking to meet our coding standards, and the rigidity of the output offered little room for easy adjustments. Moreover, the integration with our existing frameworks and libraries was far from seamless, requiring a manual, time-consuming process to ensure compatibility.

Back to the Drawing Board

Determined to find a more efficient solution, we reflected on our requirements. We needed a tool that minimised upfront work and better understood our design intent, allowing for integration with our chosen frameworks and libraries. With these criteria in mind, we decided to look at a more flexible and general AI solution, leading us to our second experiment.

Experiment Two: GPT-4 Vision Preview

Our second experiment shifted towards the cutting edge of AI technology: the GPT-4 Vision Preview. This AI model promised to understand and interpret high-fidelity images exported from Figma, offering a more intuitive approach to code generation.

We started with a simple Python script that sent our Figma exports to the model. With carefully crafted prompts, we instructed it to generate TypeScript code using React.js and the Material-UI component library, focusing on well-structured, maintainable components. The results were astonishing.
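To give a feel for what such a script might look like, here is a minimal sketch, not our actual script. It only builds the request body that pairs a text prompt with an exported image. The model identifier `gpt-4-vision-preview`, the function name `build_request`, the `max_tokens` default, and the prompt wording are all assumptions for illustration; sending the payload to the API is deliberately left out.

```python
import base64

# Assumed model identifier for the GPT-4 Vision Preview.
MODEL = "gpt-4-vision-preview"


def build_request(image_bytes: bytes, prompt: str, max_tokens: int = 2000) -> dict:
    """Build a chat-style request body containing a prompt and one JPG image.

    The image is embedded as a base64 data URL, so no file hosting is needed.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": MODEL,
        "max_tokens": max_tokens,  # hypothetical limit, tune for larger screens
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:image/jpeg;base64,{encoded}"},
                    },
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real Figma JPG export.
    fake_export = b"\xff\xd8\xff\xe0 placeholder"
    request = build_request(
        fake_export,
        "Generate a TypeScript React component using Material-UI for this design.",
    )
    print(request["model"], len(request["messages"][0]["content"]))
```

In practice, the bytes would come from reading the exported file, and the resulting dictionary would be passed to whichever API client you use.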

The AI’s output was impressively accurate, requiring far less tweaking than the code from traditional tools. By refining our prompts, we could direct the AI to include extension points for custom logic and to adhere to specific library versions, ensuring the code worked right out of the gate.
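One way to keep prompts like these consistent is to assemble them from a small description of the technical environment. The sketch below is illustrative only: the `PromptSpec` class, the `build_prompt` function, the version numbers, and the extension point names are hypothetical and are not taken from our real prompts.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class PromptSpec:
    """Describes the target environment the generated code must fit into."""

    language: str = "TypeScript"
    framework: str = "React.js"
    component_library: str = "Material-UI"
    # Package name -> pinned version (hypothetical values are supplied by the caller).
    versions: Dict[str, str] = field(default_factory=dict)
    # Names of callbacks or props left for developers to fill in with custom logic.
    extension_points: List[str] = field(default_factory=list)


def build_prompt(spec: PromptSpec) -> str:
    """Turn a PromptSpec into a plain-text instruction for the model."""
    lines = [
        f"Generate {spec.language} code for the attached design using "
        f"{spec.framework} and the {spec.component_library} component library.",
        "Produce well-structured, maintainable components.",
    ]
    if spec.versions:
        pinned = ", ".join(f"{name} {ver}" for name, ver in sorted(spec.versions.items()))
        lines.append(f"Target these library versions exactly: {pinned}.")
    if spec.extension_points:
        hooks = ", ".join(spec.extension_points)
        lines.append(f"Expose these extension points as props for custom logic: {hooks}.")
    return "\n".join(lines)


if __name__ == "__main__":
    spec = PromptSpec(
        versions={"react": "18.2.0", "@mui/material": "5.15.0"},  # example pins only
        extension_points=["onSubmit", "onCancel"],
    )
    print(build_prompt(spec))
```

Keeping the environment description in data rather than in free text makes it easier to change a pinned version or add an extension point without rewriting the whole prompt.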

Encouraged by these results with GPT-4 Vision, we built our own Visual Studio Code extension. It lets our team generate TypeScript code directly from JPG exports, significantly accelerating our development process.

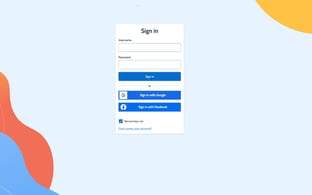

Figma on the left, generated code on the right

The Future of UI Code Generation at Propel

This breakthrough with GPT-4 Vision has paved the way for a more integrated and efficient code generation process at Propel. It represents not just a step, but a leap forward in our continuous quest to refine and enhance our development workflows.

Our experiments have led us to an important realisation: while off-the-shelf products offer convenience, they currently lack the maturity to fully understand our UI designs and convert them into code effectively. However, advanced AI models like GPT-4 Vision have not just met but exceeded our expectations, demonstrating that, given prompts that describe your technical environment well, GPT-4 has an impressive understanding of design and code generation.

As we continue to explore the frontiers of AI in software development, we invite you to join us on this exciting journey. The future is bright, and at Propel Ventures, we’re just getting started. Stay tuned for more insights as we propel forward into the next generation of software development.